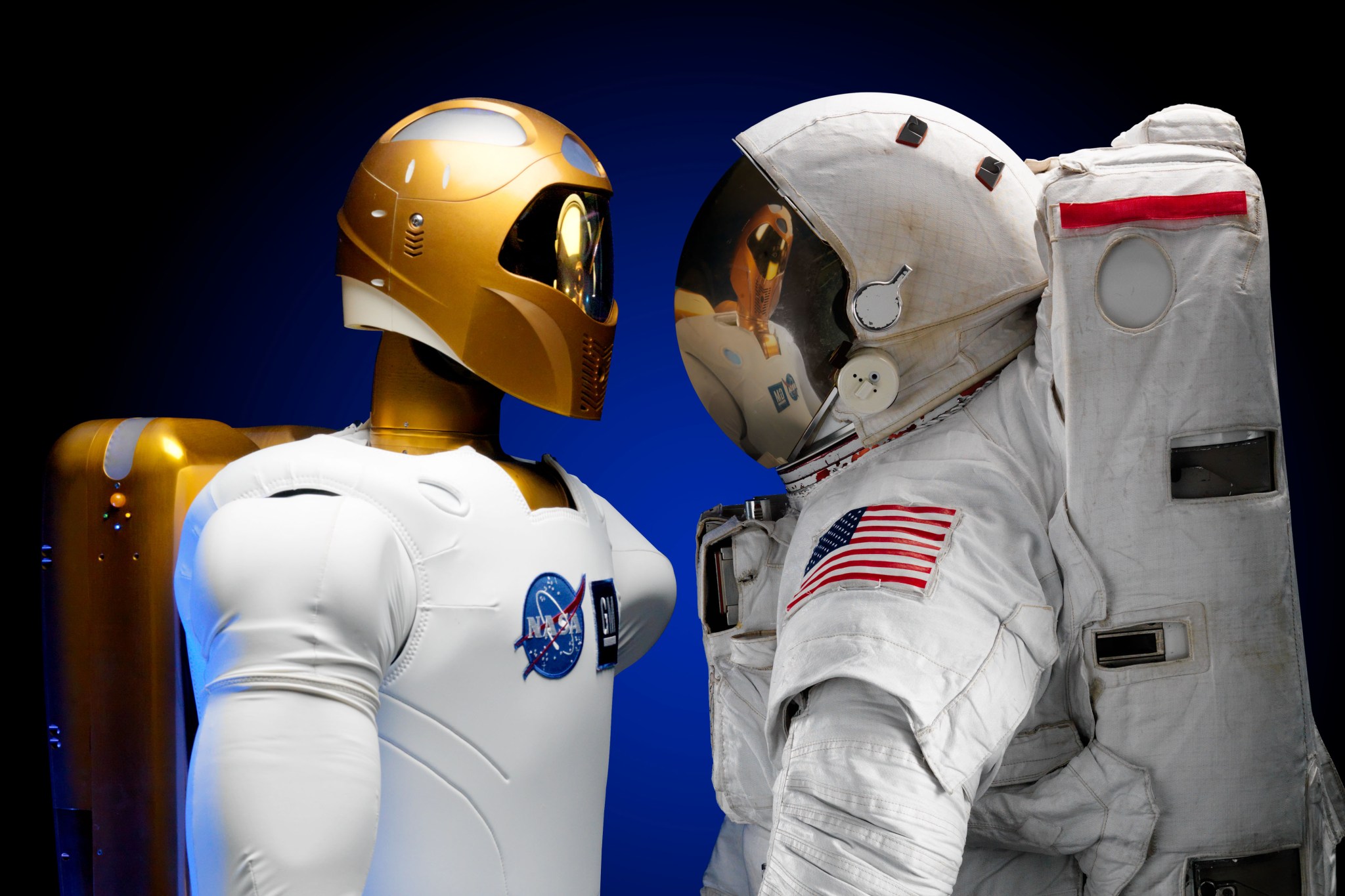

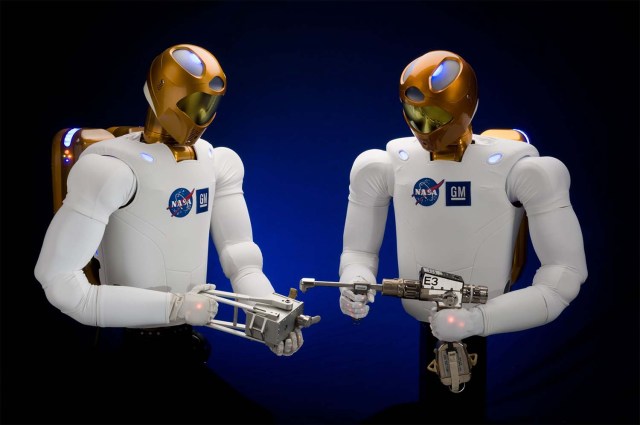

A Robonaut is a dexterous humanoid robot designed and built at NASA Johnson Space Center in Houston, Texas. Our challenge is to build machines that can help humans work and explore in space. Working side by side with humans, or going where the risks are too great for people, Robonauts will expand our capacity for construction and discovery. Central to that effort is a capability we call dexterous manipulation, the ability to use one’s hands to do work, and our goal has been to build machines with dexterity that exceeds that of a suited astronaut.

What is a Robonaut?

The Robonaut project has been conducting research in robotics technology on board the International Space Station (ISS) since 2012.

Recently, the original upper-body humanoid robot was upgraded with two climbing manipulators (“legs”), more capable processors, and new sensors. While Robonaut 2 (R2) has been working through checkout exercises on orbit following the upgrade, technology development on the ground has continued to advance.


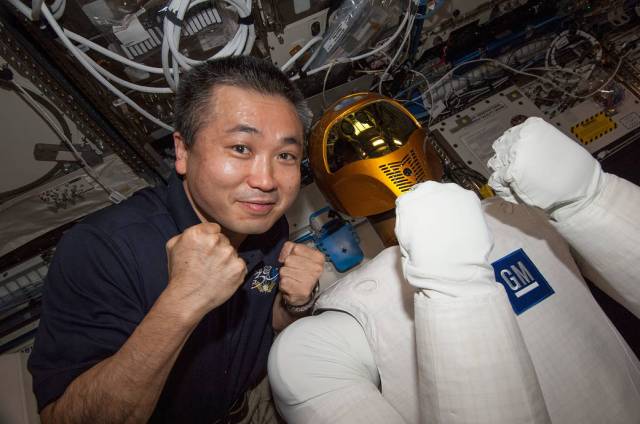

Mission to the International Space Station

On February 24, 2011, NASA launched the first human-like robot to space aboard space shuttle Discovery as part of the STS-133 mission. The robot was sent to become a permanent resident of the International Space Station.

Robonaut 2, or R2, was developed jointly by NASA and General Motors under a cooperative agreement to create a robotic assistant that can work alongside humans, whether they are astronauts in space or workers at GM manufacturing plants on Earth.


ISS Mobility Upgrade

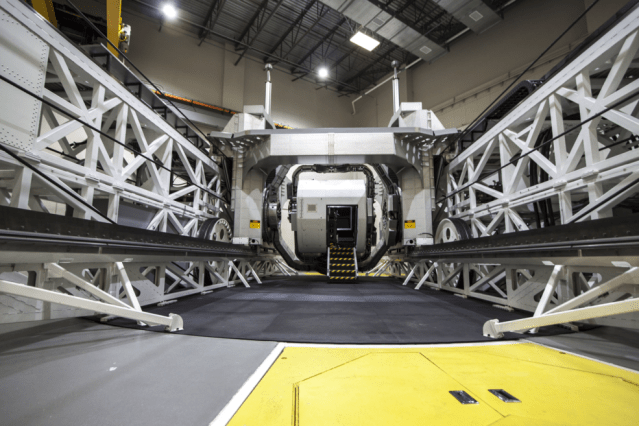

R2 was initially deployed as a torso-only humanoid restricted to a stanchion. The R2 mobility platform was added later, giving R2 two new legs for maneuvering inside the ISS.

In 2010, the Robonaut Project embarked on a multi-phase mission to perform technology demonstrations on board the International Space Station, showcasing state-of-the-art robotics technologies through the use of Robonaut 2 (R2). Using the ISS as a test bed during early development lets the project both demonstrate capability and test technology while still making advancements on the ground.


NASA and General Motors Partnership

NASA and GM Take a Giant Leap Forward in Robotics

Engineers and scientists from NASA and GM worked together through a Space Act Agreement at the agency’s Johnson Space Center in Houston to build a new humanoid robot capable of working side by side with people. Using leading-edge control, sensor, and vision technologies, future robots could assist astronauts during hazardous space missions and help GM build safer cars and plants.


Features

Explore NASA's feature articles about Robonaut 2.

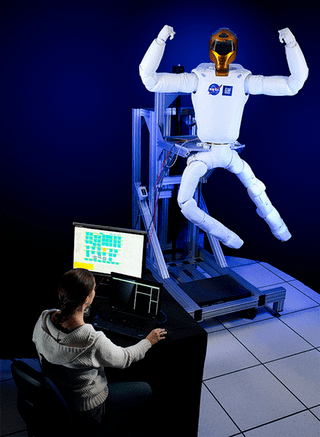

A Step Up for NASA's Robonaut: Ready for Climbing Legs

NASA has built and is sending a set of high-tech legs up to the International Space Station for Robonaut 2 (R2), the station's robotic crewmember.

NASA’s R5 aka Valkyrie

Valkyrie, a name taken from Norse mythology, is designed to be a robust, rugged, entirely electric humanoid robot capable of operating in degraded or damaged human-engineered environments.

Robonaut 1: The First Generation

The Robonaut project seeks to develop and demonstrate a robotic system that can function as an EVA astronaut equivalent.

NASA's Robonaut Legs Headed for International Space Station

NASA has built and is sending a set of high-tech legs up to the International Space Station for Robonaut 2 (R2), the station’s robotic crewmember. The new legs are scheduled to launch on the next SpaceX commercial cargo flight to the station from Cape Canaveral Air Force Station in Florida.

Robotic Technology Lends More Than Just a Helping Hand

The Human Grasp Assist device, aka K-Glove or Robo-Glove, is a robotic glove that auto workers and astronauts can wear to help do their respective jobs better while potentially reducing the risk of repetitive stress injuries.

NASA's Ironman-Like Exoskeleton Could Give Astronauts, Paraplegics Improved Mobility and Strength

While NASA’s X1 robotic exoskeleton can’t do what you see in the movies, this latest robotic space technology spinoff, derived from NASA’s Robonaut 2 project, may someday help astronauts stay healthier in space, with the added benefit of helping paraplegics walk here on Earth.

Robonaut Video Gallery
